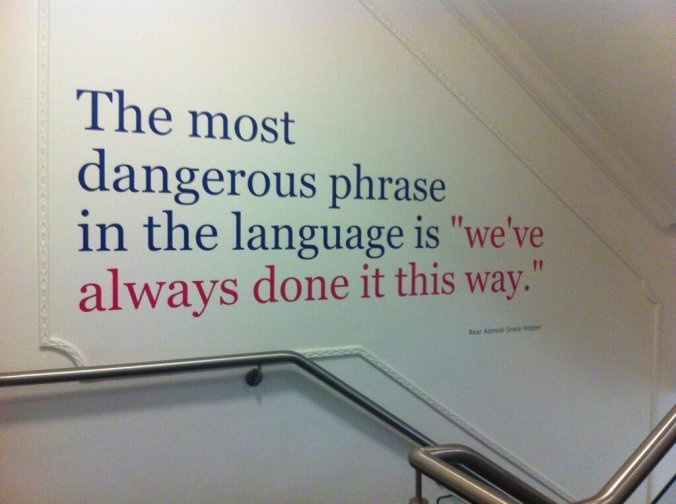

One of the first things I’ve been involved with in my new post has been the development of assessment without levels. It’s been strange for me to move back to a school still using them! I’m teaching Year 7 English and I’ve had to re-learn (temporarily at least!) the levels system to assess their assignments. What struck me particularly was the way learning gets lost when you hand back assignments with levels on them. I’d been so used to handing work back with formative comments only over the last two years that I was quite unprepared for the buzz of “what did you get?”, fist-pumping triumph when a Level 5.6 was awarded (“I was only 5.3 last time!”) and disappointment on the flip side. I had to work really hard to focus the students on my carefully crafted formative feedback and DIRT tasks – and I know that some of them only paid lip-service to my requests to engage with the comments in a “please-the-teacher” exercise whilst their minds were still occupied with the level. All I kept thinking about was Dylan Wiliam’s advice about ego-involving and task-involving feedback:

Levels have to go, then – this is not a surprise. It’s also perhaps unsurprising that Churchill have hung on to them, with a new Headteacher incoming (especially one who has blogged extensively about assessment without levels!). My big advantage is that, having implemented assessment without levels once, I can refine and develop the approach for my second go. I’m still pretty happy with the growth and thresholds model (originally proposed by Shaun Allison here) which was implemented at my previous school, but there are definitely refinements to make. In particular, a couple of posts have stuck with me in terms of reviewing the way we assess. The first is by the always-thought-provoking Daisy Christodoulou, who got my mental cogs whirring in November with Comparative Judgment: 21st Century Assessment. In this post, the notion that you can criteria-reference complex tasks like essays and projects is rightly dismissed:

“[it] ends up stereotyping pupils’ responses to the task. Genuinely brilliant and original responses to the task fail because they don’t meet the rubric, while responses that have been heavily coached achieve top grades because they tick all the boxes…we achieve a higher degree of reliability, but the reliable scores we have do not allow us to make valid inferences about the things we really care about.”

Instead, Daisy argues, comparing assignments, essays and projects to arrive at a rank order allows for accurate and clear marking without referencing reams of criteria. Looking at two essays side-by-side and deciding that this one is better than that one, then doing the same for another pair and so on does seem “a bit like voodoo” and “far too easy”…

“…but it works. Part of the reason why it works is that it offers a way of measuring tacit knowledge. It takes advantage of the fact that amongst most experts in a subject, there is agreement on what quality looks like, even if it is not possible to define such quality in words. It eliminates the rubric and essentially replaces it with an algorithm. The advantage of this is that it also eliminates the problem of teaching to the rubric: to go back to our examples at the start, if a pupil produced a brilliant but completely unexpected response, they wouldn’t be penalised, and if a pupil produced a mediocre essay that ticked all the boxes, they wouldn’t get the top mark. And instead of teaching pupils by sharing the rubric with them, we can teach pupils by sharing other pupils’ essays with them – far more effective, as generally examples define quality more clearly than rubrics.”

The bear-trap of any post-levels system is always to find that you’ve accidentally re-created levels. Michael Tidd has been particularly astute about this in the primary sector: “Have we simply replaced the self-labelling of I’m a Level 3, with I’m Emerging?” This is why systems like the comparative judgment engine on the No More Marking site are useful. Deciding on a rank order allows you to plot the relative attainment of each piece of work against the cohort; “seeding” pre-standardised assignments into the cohort would then allow you to map the performance of the full range.
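To make the idea of turning paired judgments into a rank order concrete, here is a minimal sketch in Python. It assumes a Bradley-Terry model fitted with a simple iterative update, which is one common way to do this; it is not a description of how the No More Marking engine actually works. The function name, the script labels and the small pseudo-count are all illustrative assumptions, and "seeding" of standardised assignments is not modelled.

```python
from collections import defaultdict


def rank_by_comparisons(judgments, iterations=200):
    """Estimate a rank order from (winner, loser) paired judgments.

    Assumes a Bradley-Terry model with a simple iterative fit.
    Each judgment must compare two different scripts.
    """
    wins = defaultdict(int)         # comparisons each script has won
    pair_counts = defaultdict(int)  # how often each pair was compared
    scripts = set()
    for winner, loser in judgments:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1
        scripts.update((winner, loser))

    strength = {s: 1.0 for s in scripts}  # start everyone equal
    for _ in range(iterations):
        updated = {}
        for s in scripts:
            denom = 0.0
            for pair, n in pair_counts.items():
                if s in pair:
                    (other,) = pair - {s}
                    denom += n / (strength[s] + strength[other])
            # Assumption: a small pseudo-count stops scripts with
            # no wins from collapsing to a strength of zero.
            updated[s] = (wins[s] + 0.1) / denom
        # Rescale so the strengths stay on a stable scale.
        total = sum(updated.values())
        strength = {s: v * len(scripts) / total for s, v in updated.items()}

    # Strongest script first.
    return sorted(scripts, key=lambda s: strength[s], reverse=True)


# Hypothetical judgments: each tuple is (better essay, weaker essay).
judgments = [
    ("A", "B"), ("A", "C"), ("A", "D"),
    ("B", "C"), ("B", "D"),
    ("C", "D"),
]
rank_order = rank_by_comparisons(judgments)
```

No criteria appear anywhere in the sketch: the only input is a series of "this one is better than that one" decisions, which is the point Daisy makes about replacing the rubric with an algorithm.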

At this point, Tom Sherrington’s generously shared work on his assessment system using the bell curve comes to the fore. Tom first blogged about assessment, standards and the bell curve in 2013 and has since gone on to use the model in the KS3 assessment system developed at Highbury Grove. “Don’t do can do statements” he urges – echoing Daisy Christodoulou’s call to move away from criteria-referencing – and instead judge progress based on starting points:

Finally, this all makes sense. This is how GCSE grades are awarded – comparable outcomes models the scores of all the students in the country based on the prior attainment model of that cohort, and shifts grade boundaries to match the bell curve of each cohort. It feels alien and wrong to teachers like me, trained in a system in which absolute criteria-referenced standards corresponded to grades, but it isn’t – it makes sense. Exams are a competition. Not everyone can get the top grades. It also makes sense pedagogically. We are no longer in a situation where students need to know specific amounts of Maths to get a C grade (after which point they can stop learning Maths); instead they need to keep learning Maths until they know as much Maths as they possibly can – at which point they will take their exams. If they know more Maths than x percentage of the rest of the country, they will get x grade. This is fair.

Within the assessment system, getting a clear and fair baseline assessment (we plan to use KS2 assessments, CATs and standardised reading test scores) will establish a starting profile. At each subsequent assessment point, whether it be in Dance, Maths, Science, History or Art, comparative judgment will be used to create a new rank order, standardised and benchmarked (possibly through “seeded” assignments or moderated judgment). Students’ relative positions at these subsequent assessment points will then allow judgments of progress: if you started low but move up, that’s good progress. If you start high but drop down, we need to look at what’s happening. Linking the assignments to a sufficiently challenging curriculum model is essential; then if one assignment is “easier” or “harder” than others it won’t matter – the standard is relative.
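The progress judgment described above can be sketched roughly as follows. This is only an illustration under stated assumptions: the student names, the scores, the `tolerance` threshold of 10 percentile points and the three labels are all hypothetical, and the real system is still in development. The point it shows is that the raw scores at the two assessment points never need to be on the same scale, because only relative position is compared.

```python
def percentile_ranks(scores):
    """Percentage of the cohort scoring strictly below each student."""
    values = list(scores.values())
    n = len(values)
    return {
        student: 100.0 * sum(v < score for v in values) / n
        for student, score in scores.items()
    }


def progress_judgments(baseline, latest, tolerance=10.0):
    """Compare each student's relative position at two points.

    `tolerance` (in percentile points) is a hypothetical threshold
    for ignoring small shifts in position.
    """
    before = percentile_ranks(baseline)
    after = percentile_ranks(latest)
    judgments = {}
    for student in before:
        if student not in after:
            continue  # no later assessment to compare against
        change = after[student] - before[student]
        if change > tolerance:
            judgments[student] = "moved up"
        elif change < -tolerance:
            judgments[student] = "moved down"
        else:
            judgments[student] = "held position"
    return judgments


# Hypothetical data: baseline scores and a later assignment
# marked on a completely different scale.
baseline = {"Ana": 80, "Ben": 95, "Cal": 100, "Dee": 110}
latest = {"Ana": 58, "Ben": 52, "Cal": 60, "Dee": 71}
progress = progress_judgments(baseline, latest)
```

Here Ana started lowest but has overtaken Ben, so she is flagged as having moved up, while Ben is the one "we need to look at".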

As with all ventures in this field, it’s a tentative step. What we’ve come up with is in the developmental stage for a September launch. But moving away from criteria-referencing as the arbiter of standards has been the most difficult thing to do, because it’s all many of us have ever known. But that doesn’t make it right.

Hi Chris. A fascinating (as always) article. Isn’t the danger of the ‘rank order’ approach that it is premised on the subjectivity of an individual marker? What I’m getting at is that the virtue of criterion referencing is that there is a shared professional framework for assessment which reduces the impact of teacher subjectivity. With the added virtue of known objectives for the student to aim at it would seem to provide a fairer (dare I say ‘level’!) playing field?

Hi Mark – thanks for the comment. I’m not saying do away with criteria altogether; it is important to share “what success looks like” with students so they know what they’re aiming for, and of course there are criteria attached to the exam mark schemes which underpin national assessment. Criteria for an individual assignment or assessment are fine. What I’m saying is don’t fall into the trap of trying to tie those criteria to grades or levels or bands or descriptors. To say that “showing insight into the poet’s craft” is Grade B, or “writing in clear and structured paragraphs which are cohesive and coherent” is Grade 4 is where the trap lies – this is reinventing levels. Sitting down and comparing the work of two students and saying which is better is clear and uncontroversial – I hope! – especially if you have the get-out clause of saying they’re exactly the same standard.

The example that I think best sums this up is the child coming home from school to tell their family that they got 62% on a Maths test. On its own, this information is useless. If 62% was the lowest in the class, this is cause for concern; if the score was in the top five, then a cause for celebration. The relative score is all – whereabouts on the scale? And where does this relate to the prior attainment position? This provides the attainment and progress standards which can be used to track and assess. The same applies to any other subject, or any other piece of work, I think.
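The 62% example can be worked through in a few lines of Python. The two sets of class scores below are invented purely for illustration, and `position_in_class` is a hypothetical helper name.

```python
def position_in_class(score, class_scores):
    """1-based position counted from the top of the class.

    Tied scores share the best position available to them.
    """
    return 1 + sum(s > score for s in class_scores)


# The same 62% in two hypothetical classes.
strong_class = [62, 70, 75, 81, 90]
mixed_class = [62, 30, 45, 50, 55, 58, 64, 40]

pos_strong = position_in_class(62, strong_class)  # bottom of the class
pos_mixed = position_in_class(62, mixed_class)    # near the top
```

The raw score is identical in both cases; only the position within the cohort tells the family whether to worry or celebrate.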

“To say that ‘showing insight into a poet’s craft’ is Grade B… is where the trap lies” – I think the point here is about not trying to assess too frequently for the purposes of measuring progress. When we want to make a progress judgement, we have to decide whether the student has reached ‘Grade B’ or ‘Level 5’ or ‘The expected standard’ – and it really doesn’t matter what we call that; the important thing is that the judgement should be based on a significant performance assessment – or a body of work that gives evidence of a number of strands or aspects that contribute to the overall assessment.

Hi Chris,

So how does this transfer to parent reports? Do you report the ranking? Is there any element at KS3 of your staff extrapolating forward to benchmarking work as “on track towards…” a KS4 grade?

Yes, we are looking to extrapolate forwards but we are only doing so tentatively as we have no certainty about those grades going forward. We are planning on using the rankings to determine whereabouts students are on a scale and reporting the progress judgment. We are considering using the scale to report a 9-1 grade also but that’s under discussion.

Hi Chris, a few questions if you don’t mind. I don’t quite understand the comparative judgement approach, because all it tells me is where each student is ranked within a cohort, and not why they are ranked at that point. Apart from plotting progress from starting point ‘x’, I’m not sure how knowing where I am in relation to other people can help me improve my learning because it doesn’t give me feedback? (I’m quite possibly missing the point completely here!)

Another question is about the idea of focusing on task based rather than on ego based, when assessing. I get this, but I’m wondering how you square this with the idea of ranking (either within a task, or within growth mindset/effort grades). Surely if you rank me within a cohort, this also links to ego, because I then just go ‘oh I’m better than x or worse than y’? Doesn’t the same negative effect happen with this when it’s about effort, as well as when it’s about task?

The last question is about parents. As a parent, now that levels have gone, I feel like I am getting a lot less information than I used to. In the past I could criterion reference my kids’ level to the national curriculum, but now I feel a bit like I am swimming through mud in terms of what the assessments mean. I realise that assessment isn’t necessarily about parents, but for many parents who want to support their children’s learning, knowing that they are ‘emerging’ or whatever is not very helpful. The other thing for parents and children is I always wonder how it would feel to be told from age 4 to age 16 that your child was *always* below a ‘national standard’? If we take an average to measure our children, then this is going to happen to all the parents and children below the average point. I appreciate that this happens at GCSE, but I’m not sure I would want to constantly be told I was ‘below average’ for the 12 years up to that point. Mostly I just want to know what my kids *can do* and not where they are in relation to everyone else.

Thanks, and I’m glad to hear the new role is going well!

Hi Chris, thanks for this thought-provoking blog post. I agree with the idea that school-based assessment cannot be wholly criteria referenced (criteria are always open to interpretation – so there has to be some standardisation). However, I do think you might be veering too much the other way!

If you use a purely relative scale, you will never be able to measure whether your overall standard is improving or declining. I suppose your approach will work if you have enough of the ‘seeded’ or moderated examples to ensure that there is a clear standard underpinning your ranking system… But, isn’t that just reinventing levels after all? Didn’t we use exemplars and moderation meetings to ensure that levelling was meaningful across a cohort?